The risks of ChatGPT for identity fraud

Mike Simpson

28th May, 2023 3 min read

ChatGPT, and generative AI systems more broadly, can pose significant risks to Identity and Access Management (IAM) systems. These risks arise mainly from the models’ ability to generate highly convincing and contextually appropriate responses, which can be exploited by malicious actors. To mitigate these risks, it is essential to follow best practices such as implementing multi-factor authentication, regularly updating and patching the IAM software, conducting security assessments and audits, enforcing strong password policies, and providing security awareness training to users.

Fraud prevention analysts are overwhelmed as bot-based and synthetic identity fraud grows more varied and spreads globally. Their jobs are so challenging because the models they use were not designed to deal with synthetic identities and the rise of AI. ChatGPT has escalated the magnitude of the problem.

ChatGPT poses three significant dangers for identity fraud.

1. Social Engineering & Phishing

GPT models can be used to launch sophisticated social engineering attacks. By using the model to impersonate a trusted entity or individual, an attacker can trick users into revealing private information such as usernames, passwords, or other authentication credentials. The attacker could also deceive them into visiting malicious websites or downloading malware, leading to unauthorized access to systems and data.

While phishing has always been a danger, GPT models give fraudsters the ability to compose emails that are much harder to distinguish from an authentic message from a trusted source. The extract below is an example of ChatGPT’s response to a request for an email instructing employees to install software for a critical security update. Any malicious actor can now create fake emails that closely resemble authentic correspondence.

Trend Micro also advises users to be on the lookout for sites that promote new GPTs that are supposedly superior to ChatGPT. The list of suspect sites is growing rapidly and includes:

– Openai-pro.com

– Pro-openai.com

– Openai-news.com

– Openai-new.com

– Openai-application.com

These phishing sites all operate in the same way: a phishing email arrives in your inbox, promising a new AI chatbot that is supposedly superior to ChatGPT. The site then often requests service permissions, and if the user complies, a trojan is downloaded onto the device, at which point the cybercriminal can steal personal credentials.
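The suspect domains listed above share a pattern: a well-known brand name joined to extra words with a hyphen. As a rough illustration only, the Python sketch below flags domains that contain a brand token but are not a trusted domain or one of its subdomains. The function name `is_suspect_domain`, the trusted-domain set, and the brand tokens are all assumptions made for this example; a real filter would rely on maintained threat-intelligence feeds rather than a simple string check.

```python
import re

# Assumption for this sketch: the only legitimate domain we trust.
TRUSTED_DOMAINS = {"openai.com"}

# Assumption for this sketch: brand names that phishing domains tend to borrow.
BRAND_TOKENS = {"openai", "chatgpt"}


def is_suspect_domain(domain: str) -> bool:
    """Return True if the domain borrows a brand token but is not trusted."""
    d = domain.strip().lower().rstrip(".")

    # A trusted domain, or any subdomain of one, is not suspect.
    if any(d == t or d.endswith("." + t) for t in TRUSTED_DOMAINS):
        return False

    # Split on dots and hyphens so "openai-pro.com" yields the token "openai".
    labels = re.split(r"[.\-]", d)
    return any(token in labels for token in BRAND_TOKENS)


if __name__ == "__main__":
    for name in ["Openai-pro.com", "Pro-openai.com", "openai.com", "example.com"]:
        print(name, is_suspect_domain(name))
```

A check like this catches only the naming pattern shown in the list; it will miss misspellings and unrelated domains, so it complements user awareness rather than replacing it.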

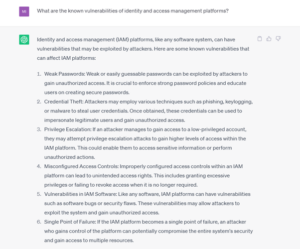

2. Exploitation of Knowledge

GPT models are trained on a vast amount of information, including technical details and potential vulnerabilities of various systems. Malicious actors can use GPT models to gain insights into potential weaknesses in identity and access management services. This information can then be used to exploit vulnerabilities and gain unauthorized access or excessive permissions.

3. Synthetic Identities

GPT models can assist in creating realistic fake identities or forging identity-related documents. Synthetic identities are sometimes created by buying stolen ID documents from the dark web and then using AI to insert a different face on the ID document. Creating deepfake faces and videos is simple using generative AI tools, including image models such as DALL-E, as can be seen from the morphing of the single authentic image below.

Even advanced identity verification services have difficulty keeping up with these elaborate fakes. Moreover, government databases that record the details of identity documents can no longer be relied on as the single source of truth, because many of the ID document images on the dark web have never been reported as lost or stolen.

The net result is that these synthetic identities are accepted as authentic by organizations, and the fraudulent actor gains access to sensitive systems or data, bypassing authentication and identity verification processes.

How to mitigate the risk?

To mitigate these vulnerabilities, it is essential to follow best practices such as implementing multi-factor authentication, regularly updating and patching the IAM software, conducting security assessments and audits, enforcing strong password policies, and providing security awareness training to users.
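As one small illustration of what enforcing a strong password policy can look like in practice, the Python sketch below reports which rules a candidate password breaks. The function name `password_policy_violations`, the 12-character minimum, and the specific character-class rules are assumptions chosen for this example, not a recommended standard; each organization should set its own policy.

```python
import string

# Assumption for this sketch: minimum length required by the policy.
MIN_LENGTH = 12


def password_policy_violations(password: str) -> list[str]:
    """Return a list of policy rules the password breaks (empty if compliant)."""
    violations = []
    if len(password) < MIN_LENGTH:
        violations.append(f"shorter than {MIN_LENGTH} characters")
    if not any(c.islower() for c in password):
        violations.append("no lowercase letter")
    if not any(c.isupper() for c in password):
        violations.append("no uppercase letter")
    if not any(c.isdigit() for c in password):
        violations.append("no digit")
    if not any(c in string.punctuation for c in password):
        violations.append("no symbol")
    return violations


if __name__ == "__main__":
    for candidate in ["password", "Tr0ub4dor&3-horse"]:
        print(candidate, password_policy_violations(candidate))
```

Returning the list of broken rules, rather than a simple pass or fail, lets a sign-up form tell the user exactly what to change. A password check on its own does not stop phishing, which is why it appears alongside multi-factor authentication in the list above.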

If you would like more information, reach out to Truuth for a demo of our deep-fake detection and risk-based MFA solutions.
